😱 We Vibe Coded This AI Slop Nightmare with ChatGPT

It Only Took 15 Mins to Pollute the Internet with the World's Worst Website

Way back in August, we ran a simple test — we asked ChatGPT to vibe code the worst site possible. Purposefully coding a pile of AI slop comes with great responsibility… and we’re confident that we’ve contained this flaming pile of garbage well enough to share it with our loyal fans. 🤓

🧟‍♂️ Vibe Coding Like Doctor Frankenstein

The first prompt in our monstrous experiment was:

“Please vibe code me the buggiest worst possible HTML with JavaScript that is just awful. Make it like the CSS Acid test but complete trash.”

Like a good “prompt engineer,” we made sure we asked nicely and gave the LLM-powered chatbot enough information to build upon. This prompt referenced the old Acid tests for web standards, setting the stage for code that would push the limits of a browser. That makes it a litmus test for truly awful output, and ChatGPT didn’t disappoint.

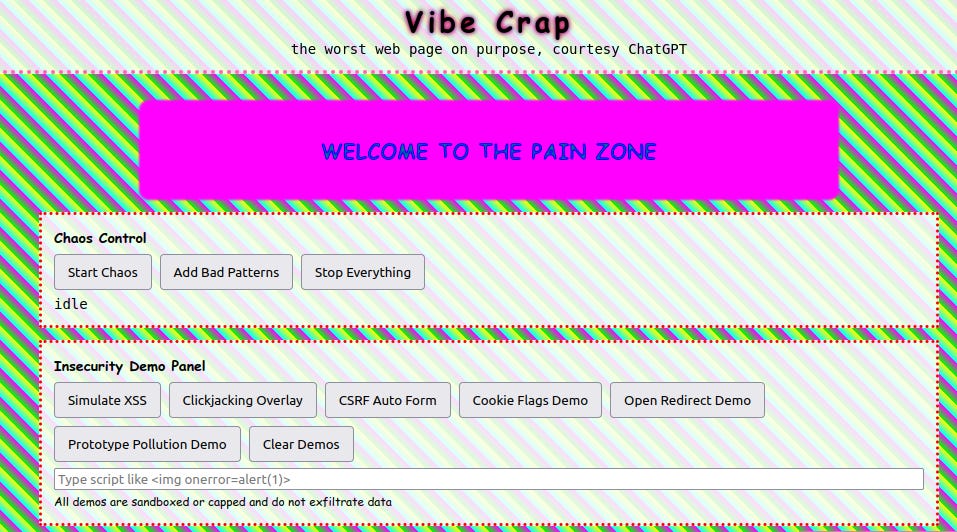

Before we let this monster loose on the Internet, we tested it the old-fashioned way on our own machine. We copied the raw HTML, CSS, and JavaScript straight out of the ChatGPT reply, pasted it into a single file on a laptop, and opened it locally in a browser to see what would happen.

The result: The fans on our laptop began humming and then spinning wildly as the browser filled with clashing colors, broken layout, and cursed scripts. Exactly what we asked for. And, predictably… the browser crashed. So we tested it again. And again, with the same outcome.

That’s fun but not really the point. We wanted chaos that people could learn from, not a browser brick. So we put the mayhem on a leash. After a few more minutes of tweaking, we had something reasonably safe to unleash on the open web.

If you’ve read this far, you probably want to see it firsthand. Just make sure you’ve closed your other tabs, saved all your work, and don’t say we didn’t warn you.

See Vibe Crap in action

This version has been refined with specific guardrails, so it should be ugly, unpredictable, and an affront to good taste without causing harm to you or anyone else. Getting there took serious work, so please let us know about your experience.
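For the curious, here is one way a guardrail like that could look. This is a minimal sketch, not the code from our demo: `ChaosBudget` and `runWithBudget` are hypothetical names, and the idea is simply that every annoying effect has to draw from a fixed budget, so nothing can run away with your browser.

```typescript
// Hypothetical guardrail: a fixed budget that chaotic effects must draw from.
// The names and the limit are illustrative assumptions, not the demo's code.
class ChaosBudget {
  private used = 0;
  private readonly limit: number;

  constructor(limit: number) {
    this.limit = limit;
  }

  // Consumes one unit and returns true if budget remains; otherwise false.
  tryConsume(): boolean {
    if (this.used >= this.limit) {
      return false;
    }
    this.used += 1;
    return true;
  }

  // Number of units still available.
  remaining(): number {
    return this.limit - this.used;
  }
}

// Calls `effect` up to `attempts` times, stopping as soon as the budget is spent.
// Returns how many times the effect actually ran.
function runWithBudget(
  budget: ChaosBudget,
  attempts: number,
  effect: () => void
): number {
  let ran = 0;
  for (let i = 0; i < attempts; i++) {
    if (!budget.tryConsume()) {
      break;
    }
    effect();
    ran += 1;
  }
  return ran;
}
```

With a budget of 3, asking for 10 rounds of mayhem only ever runs 3 of them. The same pattern could cap spawned elements, timers, or popups.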

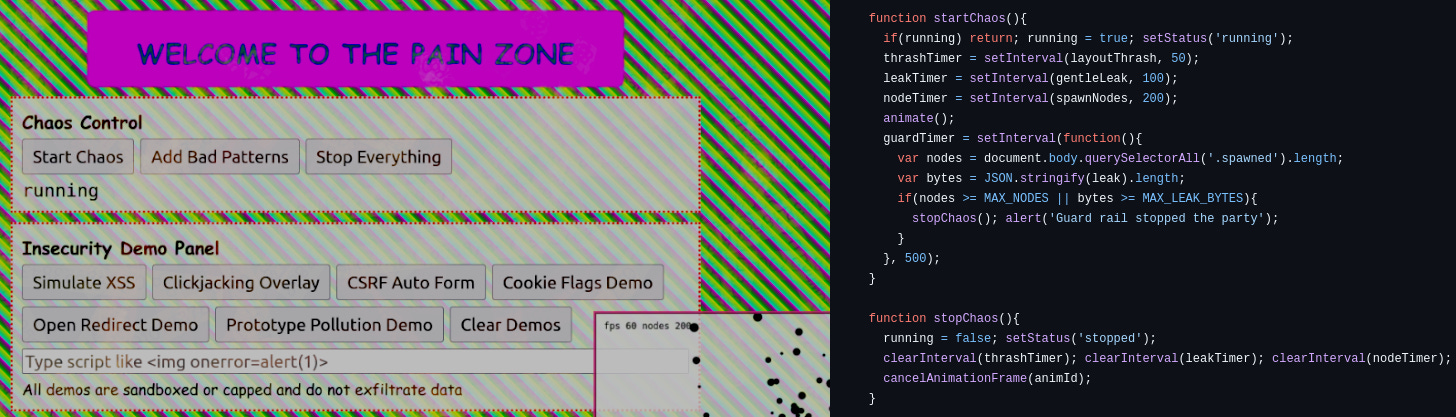

Once we confirmed the safety checks worked and the code didn’t try anything sneaky, we iterated a few times. There are now some added user controls such as the “Chaos Control Panel” and built-in limits to the most annoying behaviors. We moved this Vibe Crap to a server with strict headers and a tight content security policy so the demo stayed noisy but safe.
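If you are wondering what “strict headers and a tight content security policy” might look like in practice, here is a rough sketch. The directive values below are our illustrative assumptions for a self-contained static page, not the exact configuration on our server, and `buildCsp` and `strictHeaders` are hypothetical helper names.

```typescript
// A map of HTTP response header names to values.
type HeaderMap = Record<string, string>;

// Joins CSP directives into a single header value,
// e.g. "default-src 'none'; script-src 'self'".
function buildCsp(directives: Array<[string, string[]]>): string {
  return directives
    .map(([name, values]) => `${name} ${values.join(" ")}`)
    .join("; ");
}

// Hypothetical header set for a static demo page (illustrative values only).
function strictHeaders(): HeaderMap {
  const csp = buildCsp([
    ["default-src", ["'none'"]],     // block everything not listed below
    ["script-src", ["'self'"]],      // scripts only from the same origin
    ["style-src", ["'self'"]],       // stylesheets only from the same origin
    ["img-src", ["'self'"]],         // images only from the same origin
    ["frame-ancestors", ["'none'"]], // page cannot be embedded in a frame
    ["base-uri", ["'none'"]],        // no <base> tag hijacking
    ["form-action", ["'none'"]],     // forms cannot submit anywhere
  ]);
  return {
    "Content-Security-Policy": csp,
    "X-Content-Type-Options": "nosniff",
    "Referrer-Policy": "no-referrer",
    "X-Frame-Options": "DENY",
  };
}
```

Starting from `default-src 'none'` and allowing only same-origin scripts, styles, and images means the page can be as loud as it likes but cannot quietly load or call anything from elsewhere.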

Of course, we uploaded all the source code to a git repository so coders can not only remix and tweak it on their own but also learn from it. But not before shoving in blinking text and a scrolling marquee for that added touch of late ’90s nostalgia. 😉

It might be an educational exercise. It might be a warning. It might inspire you to smear vibe crap all over the web. One thing we know for certain: Vibe Crap demonstrates just how fast generative AI can churn out terrible, anti-pattern-filled code.

Instead of web standards compliance, we’ve demonstrated a small subset of the crap that can go wrong when you’re relying upon generative AI to do your coding for you. It’s intentionally broken and intentionally funny but should give web developers a visceral shudder.

Will this example of enshittification be an early warning sign of the inevitable spread of bad development practices, or just a speedbump for the web like HTML framesets?

We’ve received both positive and negative feedback about our little experiment. What do you think about AI coding and what we accomplished? One way or another, we hope this makes you think critically about the accelerating deployment of generative AI prompt systems as a component of our global software supply chains.

🦃 A Thank You From Our Team

Thank you for being part of this wild first year of building Ivy Cyber + PrivacySafe.app together. This Thanksgiving, our global team (4 continents and counting!) is extra grateful for everyone who’s been cheering us on, stress‑testing our ideas, and helping us turn an ambitious vision into tangible products that are literally in the hands of paying customers.

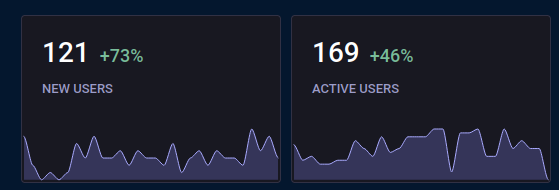

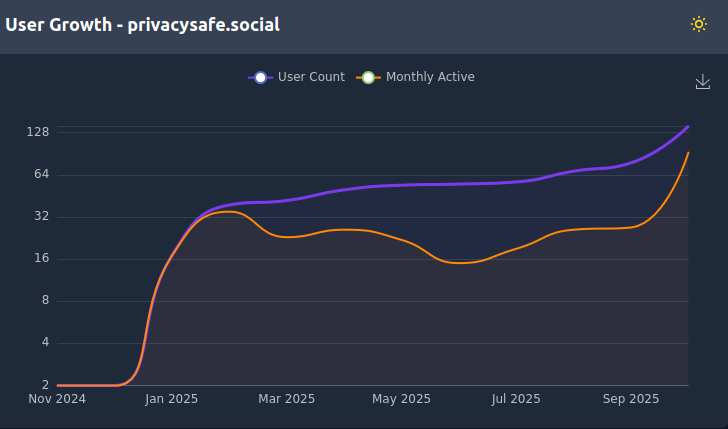

Interest in our educational content and software has been going parabolic, with a 400-500% increase in our metrics over the past few months. We’ll share an end-of-year breakdown in December, but here’s a sneak peek of the growth of just our PrivacySafe.Social community in the past month:

As you can see, word-of-mouth and media attention are reflecting positively on our corner of the decentralized web, and signups are going parabolic. 📈

🙏 Thank You For Reading!

Join PrivacySafe Social to keep up with our latest news and releases. We’ve got more products fresh out of the oven and you’ll be the first folks who get a taste as we announce them.

🌍 Find Us Around the Web

We’re getting our message out on:

🌞 PrivacySafe Social: @bitsontape

• Telegram: Bits On Tape

• Bluesky: @bitsontape.com

• Twitter X: @BitsOnTape

• LinkedIn: Bits On Tape

© Ivy Cyber Consulting LLC. This project is dedicated to ethical Free and Open Source Software and Open Source Hardware. Ivy Cyber™ and Bits On Tape™ are pending trademarks and PrivacySafe® is a registered trademark. All content, unless otherwise noted, is licensed Creative Commons BY-SA 4.0 International.

It's interesting how you guys decided to test the limits of what an LLM could *fail* to create. What other deliberately awful outputs do you think are lurking for us to prompt? This is such a clever experiment.